The future of forensics: How AI can transform investigations

A look at the potential applications and limitations of AI in forensics.

While TV dramatizations of crime scene investigations and court trials are considered a major influence on the increased demand for forensic evidence in the courtroom, technology may also be at the core of these expectations. The more sophisticated the technology that jurors have in their lives, the higher their expectations and demands are for forensic evidence, former judge Donald E. Shelton said.

As artificial intelligence and machine learning weave their way into a range of industries, forensics experts feel an urgency to evolve with technology.

At the recent Harnessing AI for Forensic Science Symposium, Shelton and other experts from across the sector explored the potential use cases of AI in forensics, along with the oversight and best practices needed to ensure machine learning models are leveraged responsibly for investigations. The symposium was hosted by RTI International, with support from the National Institute of Standards and Technology and the Johns Hopkins University Data Science and AI Institute, at the Johns Hopkins University Bloomberg Center.

3 ways AI can support forensics

Forensics experts say AI can be deployed similarly to the way other sectors are using it: to try to identify patterns and use predictive models to improve processes and reduce uncertainty. This can be applied across the forensics lifecycle to help labs monitor the way they handle evidence, creating more transparency and accountability around those decisions, according to Daniel Katz, director of the Maryland State Police Forensic Sciences Division.

- Resource allocation

Lab managers, for example, can use predictive modeling on past case data to estimate how long each case will take based on its characteristics. With this information, they can better understand the staffing and equipment needs of each case. This can also provide a data-driven needs assessment to justify more funding or additional personnel.
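
As a rough illustration of the idea, the sketch below estimates turnaround time from past cases using the median for each evidence type. It is a deliberately simple baseline, not a method described at the symposium: the case records, the `fit_median_days` and `estimate_days` names, and the choice of evidence type as the only case characteristic are all assumptions made for the example.

```python
from collections import defaultdict
from statistics import median

# Hypothetical past cases as (evidence_type, days_to_complete).
# These values are invented for illustration, not real lab data.
PAST_CASES = [
    ("dna", 40),
    ("dna", 55),
    ("dna", 35),
    ("latent_prints", 12),
    ("latent_prints", 8),
    ("trace", 20),
]


def fit_median_days(cases):
    """Return the median completion time per evidence type."""
    days_by_type = defaultdict(list)
    for evidence_type, days in cases:
        days_by_type[evidence_type].append(days)
    return {etype: median(days) for etype, days in days_by_type.items()}


def estimate_days(model, evidence_type, default=None):
    """Look up the estimate; return `default` for unseen evidence types."""
    return model.get(evidence_type, default)


model = fit_median_days(PAST_CASES)
print(estimate_days(model, "dna"))
print(estimate_days(model, "digital"))  # unseen type, falls back to default
```

A real deployment would likely use more case characteristics and a validated model, but even a baseline like this makes the estimate traceable to the historical cases behind it.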

- Case and evidence prioritization

As crime labs face substantial backlogs and growing caseloads of varying difficulty, lab directors see an opportunity to leverage machine learning to automatically scan and organize cases by complexity level and evidence priority based on historical data. The initial sorting can support faster turnaround times.

Similarly, a machine learning model could analyze past evidence types and case outcomes to rank the potential usefulness of incoming evidence, helping forensic labs prioritize which types to test first.

However, all of these potential AI applications come with high risks, such as important evidence being misclassified as not worth testing. Such errors can have life-or-death consequences for defendants and could lead to failures to hold people accountable for crimes. For these reasons, experts stressed that any AI system would need to have proven reliability and robustness before it is deployed.

- More cohesive intelligence

AI has the potential to synthesize results from forensic laboratories, which often produce findings from many kinds of evidence, such as DNA, latent prints, and trace evidence. Based on those findings, AI can produce insights, prioritize leads, and suggest potential next steps for investigators using pattern recognition and inference.

Use cases like these offer “a way to reduce guesswork and build a more responsive data-driven case management system,” said Niki Osborne of The Forensic AI, which has created a use-case generator and use-case library that highlights ways AI can help forensics experts improve their workflows and processes.

Human verification as a required guardrail for AI in forensics

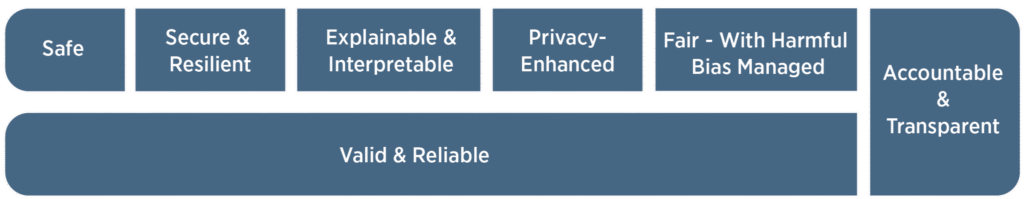

While NIST has defined the characteristics of trustworthy AI systems, experts say that the tech still requires careful human oversight, especially as forensic scientists seek to acclimate jurors, judges, and analysts in the courtroom to AI-supported forensic analysis.

Osborne also pointed to a newly released article in Forensic Science International, which outlines a responsible artificial intelligence framework specifically for forensic science.

“It’s a structured way to translate AI ethics principles into operational steps for managing AI projects within forensic organizations,” she said.

Michael Majurski, research computer scientist at NIST, emphasized the need to double-check generative systems’ answers since they’re always based on the context provided to them.

“You should view generative systems, like an LLM, more as a witness you’re putting on the stand that has no reputation and amnesia,” he said. “What it says now in this moment has no bearing on what it said in the past, and so there’s no way to trust its history of a track record.”

Additionally, as with any journey from hypothesis to conclusion, there should be an audit trail documenting the path an AI model took to reach a conclusion, including all user inputs, according to Michael Rosenblum, professor of biostatistics at the Johns Hopkins Bloomberg School of Public Health. More specifically, Majurski said, to measure a system’s performance, it’s important to compare the context provided with the response it returns to determine whether the input and output pairing makes sense.
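
One way such an audit trail could be kept is sketched below. This is a minimal example under stated assumptions, not a design proposed by the speakers: the `AuditTrail` class, its field names, and the hash-chaining approach are hypothetical choices for illustration. Each entry stores the user, the context given to the model, and the response it returned, plus a hash linking it to the previous entry so later edits can be detected.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    """SHA-256 of the entry's fields, serialized in a stable key order."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class AuditTrail:
    """Append-only log; each entry carries the hash of the one before it."""
    entries: list = field(default_factory=list)

    def record(self, user: str, context: str, response: str) -> dict:
        """Store one input/output pairing and link it to the prior entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = {
            "step": len(self.entries) + 1,
            "user": user,
            "context": context,    # what the model was given
            "response": response,  # what the model returned
            "prev_hash": prev_hash,
        }
        body["hash"] = _digest(body)
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash; False means an entry was altered or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Because each context is stored next to the response it produced, a reviewer can read the pairs side by side to judge whether the output makes sense for the input, and `verify()` gives a quick check that the record has not been changed after the fact.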

As the field of forensics continues to build consensus around needs for testing AI systems and guidance for AI use, it’ll also need to address a separate proficiency gap, Majurski added.

“Consensus is a better way to look at it in terms of what are the best practices in the community rather than an actual rubber stamp standard,” he said. “But the human side of that and how you interact with systems and the training required to know (not to) trust the LLM output or the objects detector output, that is a wide-open point at this moment, which we need to fix.”